Newton vs Einstein: it gets physical

Newtonian physics ruled the mechanical world for a couple of centuries. It was simple, elegant, almost intuitive.

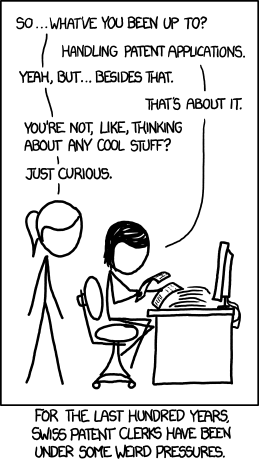

Then, in the early twentieth century, an obscure Swiss patent clerk (Einstein, if that rings a bell) demonstrated that it did not work at larger scales and needed adjustments. It was a daring theory, somewhat far-fetched, but it has been proven right every time since.

Then, a couple of decades later, quantum mechanics wreaked havoc at the smaller scale, demonstrating that anything goes! It was counterintuitive and unfathomable, and it still is. Particles are probabilistic, you have to choose between knowing their location and knowing their speed…

Yet Newtonian mechanics still rules our day-to-day life, because it is accurate enough to account for our experiences. Serious physicists, however, need either relativity or quantum mechanics to dig deeper at non-human scales. And to this day, nobody has been able to fully reconcile quantum physics with general relativity.

What about agility?

Agile values are really people-centric. Indeed, when I was first introduced to them, I thought this was a great way to reconcile users with software developers. But beyond that, Agile-inspired methodologies focus on adaptability, whereas traditional project management methods focus on careful planning.

But careful planning is only as good as the information you use to establish your plan. Traditional approaches require a lot of up-front information to succeed, a requirement that is difficult to fulfill in software development.

Instead, Agile focuses on getting the best out of the information you have, and on maximizing that information before acting on it, through the notion of the ‘last responsible moment’.

There is no question that Agile-inspired methodologies have had great success and have helped improve software project success rates.

But none of them scale.

Smaller

They do not scale down: Agile methods will not help you design a faster sort algorithm or a Sudoku solver.

I mean, I am not sure how individuals and interactions would help there.

There, design principles, prior work, and patterns are the tools you will need.

Agile methodologies have no relevance there.

Larger

They do not scale up either: being focused on people is great for small groups, but how do you scale that to hundreds, thousands, or even larger groups? How do you engage C-level stakeholders? How do you make those organizations embrace change?

That is where you need Enterprise Architecture practices. As is often the case with any tool or methodology, there are many ways you can fail with those. But a key to success is having the right attitude: being a facilitator.

But describing Enterprise Architecture is beyond the scope of this post.

In conclusion

Trying to change a large organization or information system using some Agile methodology is akin to trying to use quantum physics to describe a car. Yes, all matter is made of subatomic particles, but you will simply not succeed because it is too complex.

Understand that Agility and Enterprise Architecture are related in their objectives, but operate at very different scales.

Adopt both, and use each accordingly.

Repeat and succeed.

Atari 520 ST Motherboard
